Prometheus Service Discovery with Consul

I. Environment

| Hostname | IP address | OS | Notes |
|---|---|---|---|
| localhost | 192.168.224.11 | CentOS 7.6 | Prometheus installed via Docker |
| server2.com | 192.168.224.12 | CentOS 7.6 | |

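II. Consul-Based Service Discovery

Consul is open-source software from HashiCorp that provides distributed service discovery and key-value storage with multi-datacenter support. It is a general-purpose service discovery and registry tool, widely used in microservice architectures.

We register the exporter services with Consul through its HTTP API, then configure Prometheus to discover instances from Consul. For more on using Consul itself, see the official documentation at https://learn.hashicorp.com/consul.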

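1. Install and configure Consul from the binary (option 1 of 2)

Download the package that matches your system from https://www.consul.io/downloads. This example uses Linux, so the download command is:

    wget https://releases.hashicorp.com/consul/1.14.5/consul_1.14.5_linux_amd64.zip

Start Consul

To see more log output, run Consul in dev mode:

    consul agent -dev -client 0.0.0.0

The -client flag sets the IP address that the client interfaces bind to. The default is 127.0.0.1; 0.0.0.0 makes them reachable from other hosts.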

2. Install Consul with Docker (option 2 of 2)

Run with Docker

    docker run -d --name consul -p 8500:8500 consul:1.14.5

Check

    docker ps

3. Consul HTTP address

    http://192.168.224.11:8500/ui/dc1/services

4. Register services with Consul through the API

Register from the command line

    curl -X PUT -d '{"id": "node1","name": "node_exporter","address": "node_exporter","port": 9100,"tags": ["exporter"],"meta": {"job": "node_exporter","instance": "Prometheus server"},"checks": [{"http": "http://192.168.224.11:9100/metrics", "interval": "5s"}]}' http://localhost:8500/v1/agent/service/register

Alternatively, put the JSON data in a file and register with that file

    mkdir /data/consul
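The contents of node_exporter.json are not shown here. As a minimal sketch, assuming the file simply mirrors the fields of the inline JSON from the command-line registration, it could be created like this. The `CONSUL_DIR` variable and its `./consul-demo` default are assumptions made so the snippet runs without root; set `CONSUL_DIR=/data/consul` to match the article's layout. The `id` is reused from the inline command, so change it if you want to register an additional instance rather than update the same one.

```shell
#!/bin/sh
# Sketch only: field values are copied from the inline registration command.
# CONSUL_DIR is a hypothetical variable; the article itself uses /data/consul.
CONSUL_DIR="${CONSUL_DIR:-./consul-demo}"
mkdir -p "$CONSUL_DIR"

# The quoted "EOF" delimiter keeps the shell from expanding anything in the body.
cat > "$CONSUL_DIR/node_exporter.json" <<"EOF"
{
  "id": "node1",
  "name": "node_exporter",
  "address": "node_exporter",
  "port": 9100,
  "tags": ["exporter"],
  "meta": {
    "job": "node_exporter",
    "instance": "Prometheus server"
  },
  "checks": [
    {
      "http": "http://192.168.224.11:9100/metrics",
      "interval": "5s"
    }
  ]
}
EOF
```

Run the registration command from inside that directory so that `@node_exporter.json` resolves to this file.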

Register using the JSON file

    curl --request PUT --data @node_exporter.json http://localhost:8500/v1/agent/service/register

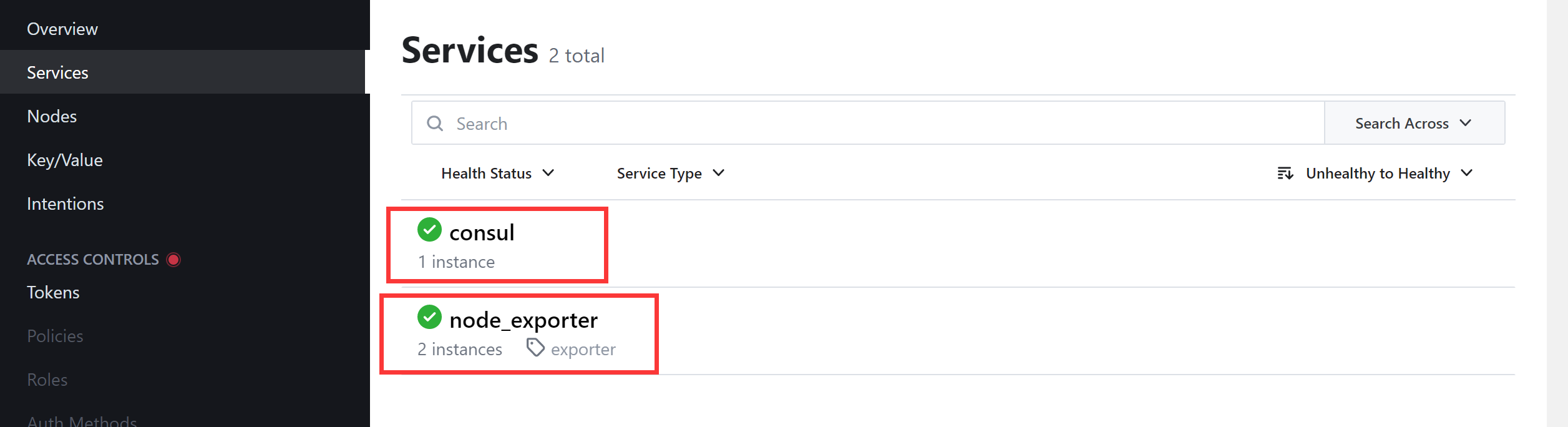

Besides the 2 services we registered, the Consul agent also registers itself as a service named consul. Open http://192.168.224.11:8500 in a browser to view the registered services.

The Consul UI lists two services: consul and node_exporter.

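5. Configure Prometheus

Above we registered 2 node_exporter services with Consul. Next we configure Prometheus to discover the node_exporter services automatically through Consul.

The scrape configuration goes in the scrape_configs section of the Prometheus configuration file prometheus.yml.

Back up the original file

    cd /data/docker-prometheus

Use cat to overwrite the previous configuration with the new one

    cat > prometheus/prometheus.yml<<"EOF"

consul_sd_configs sets the address of the Consul server used for automatic discovery. The services list is left empty ([]), which means every registered service is discovered, and the relabel_configs filter rules then keep only the designated exporters.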
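The body of the configuration is not reproduced here. The following is a minimal sketch of what such a prometheus.yml could contain, based only on the description above: the job name `consul_exporter`, the 15s interval, and the `PROM_DIR` variable with its `./prometheus-demo` default are assumptions for illustration, not the article's actual values. It filters on the `exporter` tag and copies the Consul service metadata (`job`, `instance`) onto the target labels.

```shell
#!/bin/sh
# Sketch only: writes an illustrative prometheus.yml to a scratch directory.
# PROM_DIR is a hypothetical variable; the article writes to
# /data/docker-prometheus/prometheus/prometheus.yml instead.
PROM_DIR="${PROM_DIR:-./prometheus-demo}"
mkdir -p "$PROM_DIR"

cat > "$PROM_DIR/prometheus.yml" <<"EOF"
global:
  scrape_interval: 15s

scrape_configs:
  - job_name: 'consul_exporter'          # assumed name
    consul_sd_configs:
      - server: '192.168.224.11:8500'    # Consul address from the article
        services: []                     # empty list: discover all services
    relabel_configs:
      # Keep only targets whose Consul tags contain "exporter".
      - source_labels: [__meta_consul_tags]
        regex: '.*exporter.*'
        action: keep
      # Map Consul service metadata (job, instance) to target labels.
      - regex: '__meta_consul_service_metadata_(.+)'
        action: labelmap
EOF
```

Because the `labelmap` rule copies the `meta` keys set at registration, the `job` and `instance` labels on each target come from Consul rather than from `job_name`.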

    curl -X POST http://localhost:9090/-/reload

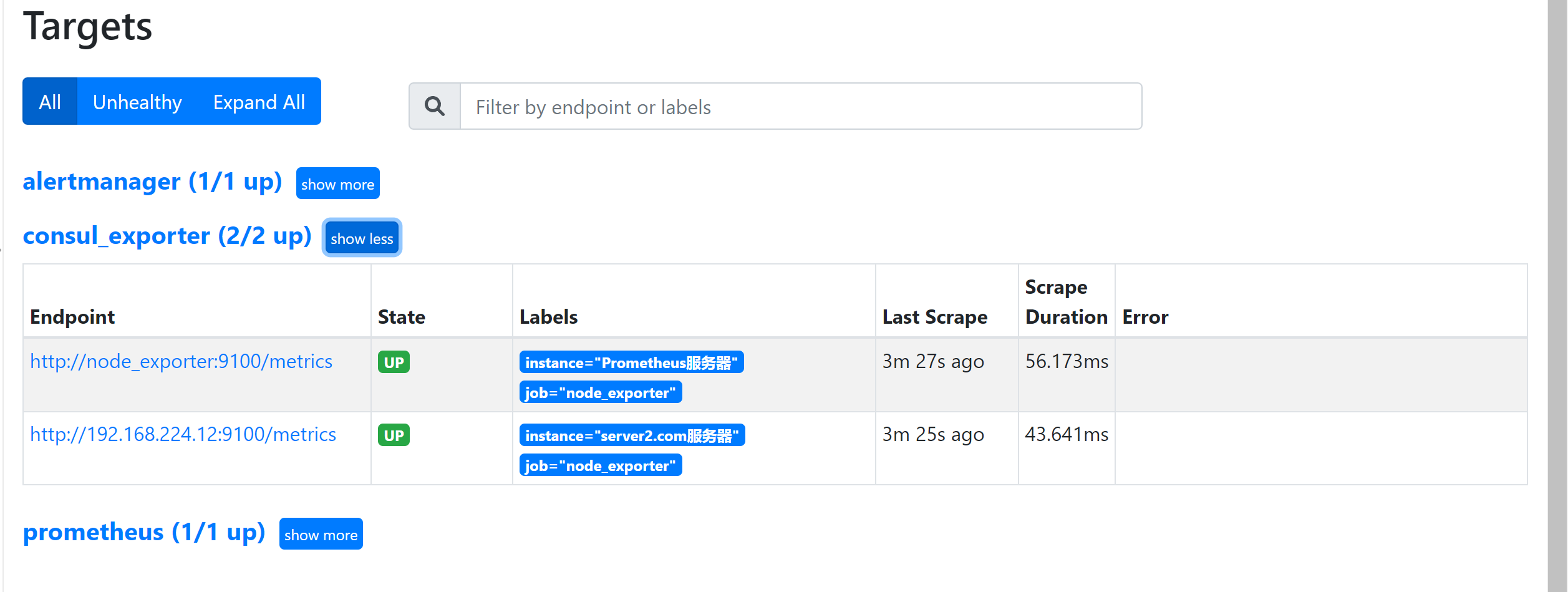

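Once the configuration is in place and Prometheus has been restarted or reloaded, open the targets page in the Prometheus UI to verify that the configuration took effect:

    http://192.168.224.11:9090/targets

Normally you should see one exporter job with 2 automatically discovered scrape targets under it.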

6. Create a script to add targets

Use a prepared script to add multiple targets at once:

    cat >/data/consul/api.sh <<"EOF"
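The script body is not shown here. As a rough sketch of what such a script might look like, the snippet below writes an api.sh that registers several exporters through the same `/v1/agent/service/register` endpoint used earlier. The `register` helper, the `CONSUL_ADDR` and `CONSUL_DIR` variables, and the two example targets (including the cadvisor service on port 8080) are hypothetical and should be replaced with your own hosts.

```shell
#!/bin/sh
# Sketch only: everything inside the heredoc is an assumption, since the
# article's original script body is not available.
CONSUL_DIR="${CONSUL_DIR:-./consul-demo}"   # the article uses /data/consul
mkdir -p "$CONSUL_DIR"

cat > "$CONSUL_DIR/api.sh" <<"EOF"
#!/bin/sh
# Register several exporters with the local Consul agent in one pass.
CONSUL_ADDR="${CONSUL_ADDR:-http://localhost:8500}"

# Usage: register ID NAME ADDRESS PORT INSTANCE
# NAME is reused as the "job" metadata value.
register() {
  payload=$(printf '{"id": "%s", "name": "%s", "address": "%s", "port": %s, "tags": ["exporter"], "meta": {"job": "%s", "instance": "%s"}, "checks": [{"http": "http://%s:%s/metrics", "interval": "5s"}]}' \
    "$1" "$2" "$3" "$4" "$2" "$5" "$3" "$4")
  curl --silent --show-error --request PUT --data "$payload" \
    "$CONSUL_ADDR/v1/agent/service/register"
}

# Hypothetical targets; replace with your own hosts and ports.
register node2 node_exporter 192.168.224.12 9100 "server2"
register cadvisor1 cadvisor 192.168.224.11 8080 "Prometheus server"
EOF
```

Each call carries the `exporter` tag, so a relabel rule that keeps targets by that tag will pick the new services up without further Prometheus changes.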

Run the script

    sh /data/consul/api.sh

Check

    http://192.168.224.11:9090/targets

7. Deregister a service from Consul

Replace ID with the id the service was registered under (for example node1):

    curl --request PUT http://127.0.0.1:8500/v1/agent/service/deregister/ID
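To make the ID placeholder concrete, here is a small sketch that wraps the deregister call in a helper. The function names `deregister_url` and `deregister` and the `CONSUL_ADDR` variable are hypothetical; only the endpoint path comes from the command above. Running the snippet as written just prints the URL that would be called for the service registered earlier as `node1`.

```shell
#!/bin/sh
# Sketch only: helper names and CONSUL_ADDR are illustrative assumptions.
CONSUL_ADDR="${CONSUL_ADDR:-http://127.0.0.1:8500}"

# Build the deregister URL for a given service ID.
deregister_url() {
  printf '%s/v1/agent/service/deregister/%s\n' "$CONSUL_ADDR" "$1"
}

# Deregister one service; --fail makes curl exit non-zero on HTTP errors.
deregister() {
  curl --silent --show-error --fail --request PUT "$(deregister_url "$1")"
}

# Show the URL for the service registered earlier with id "node1".
deregister_url node1
```

With a Consul agent running, `deregister node1` would then remove that service.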

8、问题

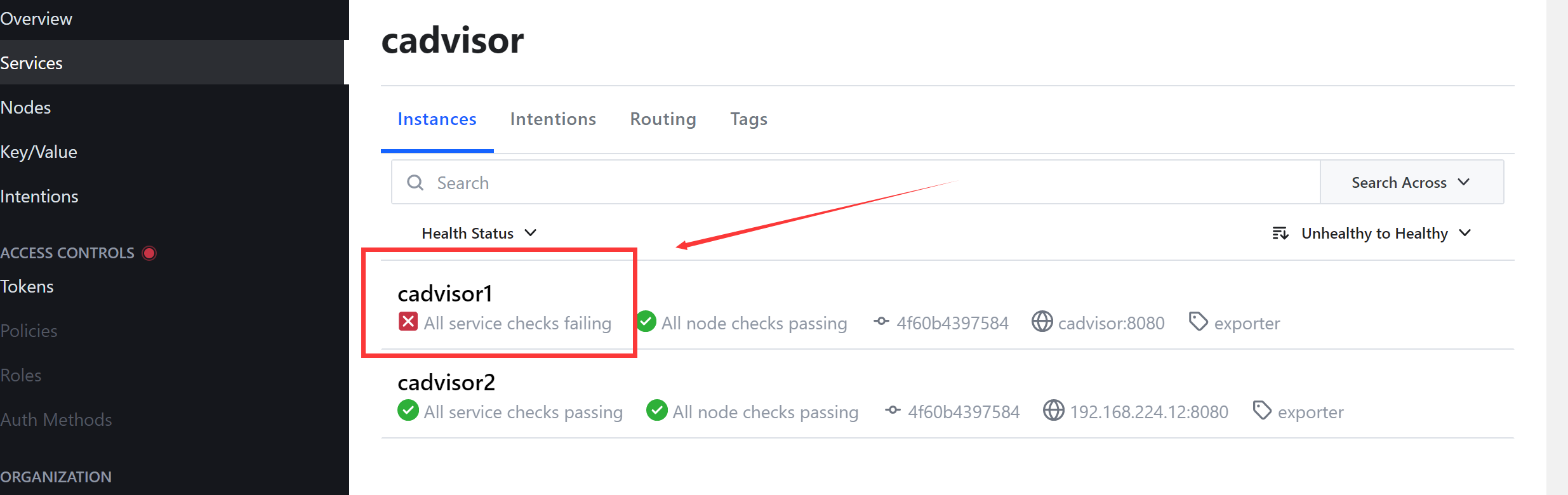

consul健康检查失败如下图:

原因:

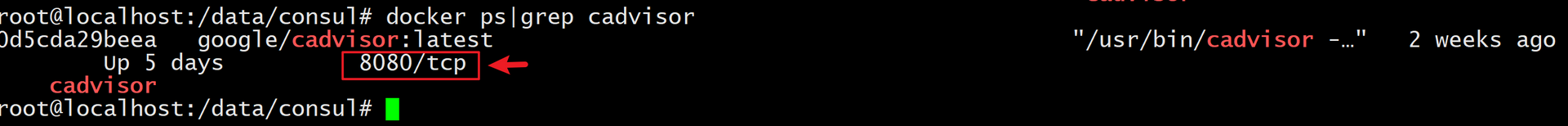

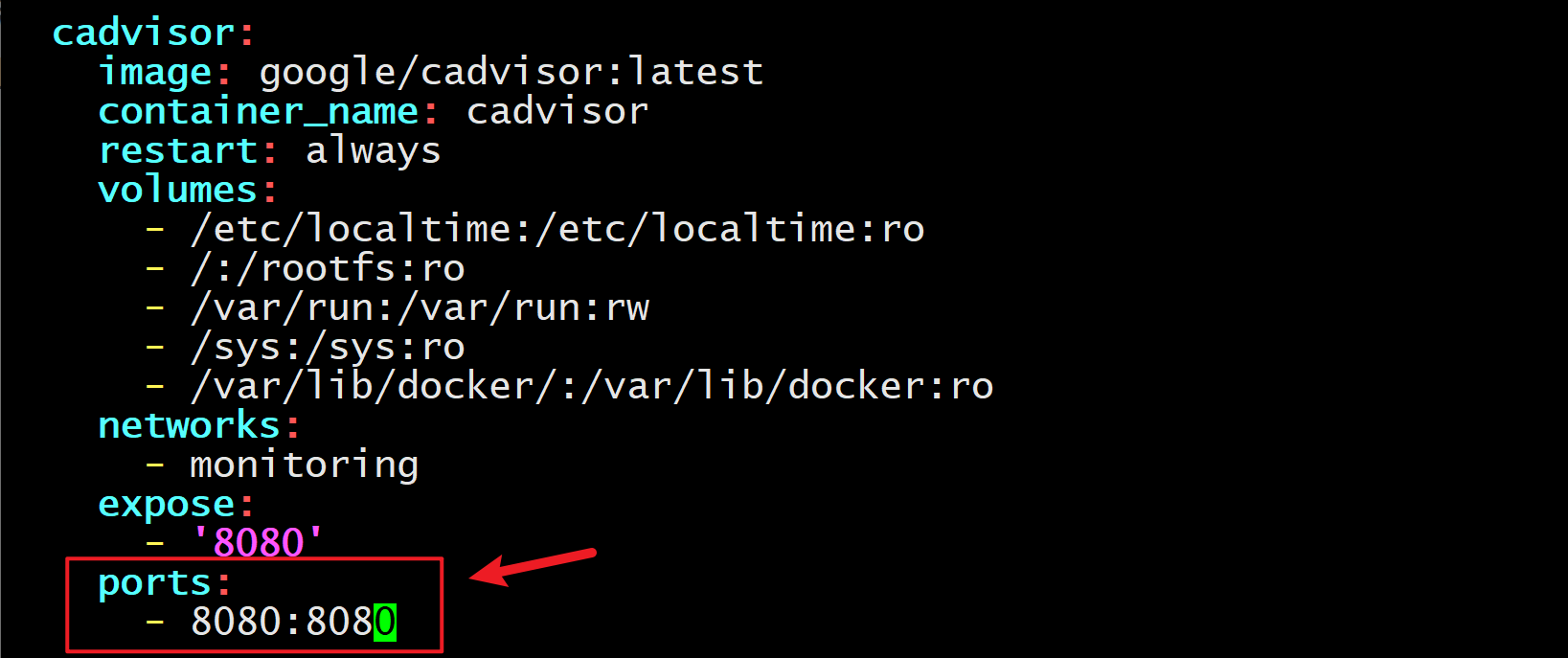

是因为并没有把8080映射出来(下图显示),导致consul监控检查不通过,所以报错。

解决

修改docker-compose.yaml文件把8080端口映射出来,就好了,如下图:

修改完成后,执行命令

1 | docker-compose up -d |

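III. ConsulManager

1. ConsulManager depends on Consul, so finish deploying Consul first (the highest version currently supported is Consul v1.14.5). See docs/Consul部署说明.md.

2. Deploy ConsulManager with docker-compose

Download (this is the docker-compose.yml from the repository root):

    wget https://starsl.cn/static/img/docker-compose.yml

Edit:

    vim docker-compose.yml

Set 3 environment variables:

- **consul_token**: the Consul login token. You can also skip the token, in which case Consul is accessed without a password (insecure).

- **consul_url**: the Consul URL (it must start with http and keep the /v1 suffix).

- **admin_passwd**: the admin password for logging in to the ConsulManager web UI.

Start:

    docker-compose pull && docker-compose up -d

Access:

Open http://192.168.224.11:1026/ and log in with the admin_passwd value you configured.

If you run into problems during installation or use, see the FAQ.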

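- Title: Prometheus Service Discovery with Consul

- Author: yichen

- Link: https://yc6.cool/2023/05/09/基于_Consul_的服务发现/

- License: Unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 4.0. Please credit the source when reposting!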